24th British Machine Vision Conference (BMVC)

Axel Furlan, Stephen Miller, Domenico G. Sorrenti, Fei-Fei Li, Silvio Savarese

Abstract

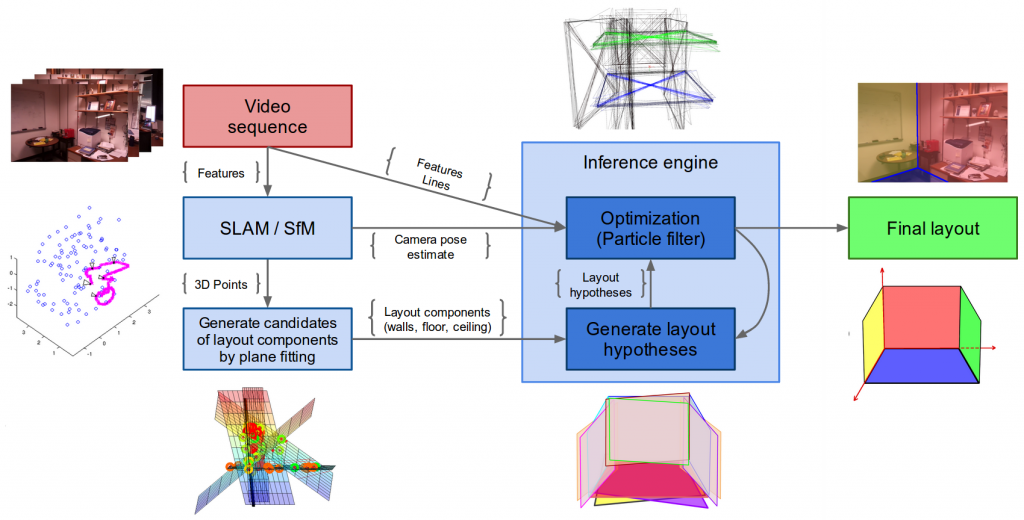

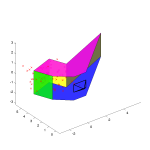

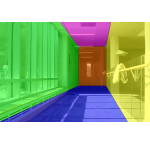

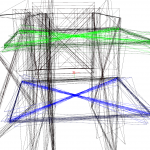

Many works have addressed indoor scene understanding, yet few of them combine structural reasoning with full motion estimation in a real-time oriented approach. In this work we address the problem of estimating the 3D structural layout of complex and cluttered indoor scenes from monocular video sequences, where the observer can move freely in the surrounding space. We propose an effective probabilistic formulation that allows us to generate, evaluate and optimize layout hypotheses by integrating new image evidence as the observer moves. Compared to state-of-the-art work, our approach makes significantly less restrictive assumptions about the scene and the observer (e.g., the Manhattan world assumption or known camera motion). We introduce a new challenging dataset and present an extensive experimental evaluation, which demonstrates that our formulation achieves near-real-time performance and outperforms state-of-the-art methods while operating under significantly less constrained conditions.

Results

Paper

- Full paper: Free your Camera Full Paper.

- Extended abstract: Free your Camera Extended Abstract.

- Supplementary material: Free your Camera Supplementary Material.

- Poster: Free your Camera Poster.

Dataset

With this paper we introduce the Michigan-Milan Indoor Dataset. It consists of 10 sequences recorded in a variety of environments, including offices, corridors and large rooms, in which the observer moves freely (6 DoF) through the scene. In most sequences the floor-wall boundaries are visible only briefly or not at all; some sequences show scenes that cannot be represented by a simple box layout model or under the Manhattan world assumption. All sequences were collected with common smartphones, in an attempt to reproduce real-life observing conditions with low-cost sensors.

The dataset is available for download (Free your Camera Dataset) and is organized as follows:

- Each folder name is composed of the sequence name and the type of smartphone used to collect it.

- Each folder contains a calibration.txt file with the output of the calibration procedure of the Matlab calibration toolbox (Toolbox Calib).

- Each folder contains a groundtruth.mat file, a MATLAB file holding a matrix of the same size as the images that encodes the ground-truth semantic labeling of the first frame of the sequence. Each pixel holds an integer label according to the following scheme:

| Label | Region |
|---|---|
| -1 | Ceiling |
| 0 | Floor |
| 1, 2, ... | Walls, numbered incrementally starting from the left |
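The label scheme above can be decoded with a few lines of Python. The following is a minimal sketch, assuming the label matrix has already been read from groundtruth.mat (for example with `scipy.io.loadmat`, which returns a dict of the file's variables; the variable name used inside the file is not documented here) and is passed in as a 2-D array or a list of rows. The helper names `label_name`, `class_fractions` and `num_walls` are hypothetical and not part of the dataset release.

```python
from collections import Counter
from typing import Dict, Sequence


def label_name(label: int) -> str:
    """Map one ground-truth label to a region name (-1 ceiling, 0 floor, k >= 1 wall k)."""
    if label == -1:
        return "ceiling"
    if label == 0:
        return "floor"
    if label >= 1:
        return f"wall {label}"
    raise ValueError(f"unexpected label {label}")


def class_fractions(labels: Sequence[Sequence[int]]) -> Dict[str, float]:
    """Fraction of pixels assigned to each region in a 2-D label matrix."""
    # int() also accepts numpy scalars and whole-valued floats, e.g. values read from a .mat file
    counts = Counter(label_name(int(v)) for row in labels for v in row)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("empty label matrix")
    return {name: n / total for name, n in counts.items()}


def num_walls(labels: Sequence[Sequence[int]]) -> int:
    """Number of distinct wall labels (1, 2, ...) present in the frame."""
    return len({int(v) for row in labels for v in row if int(v) >= 1})


# Hypothetical toy 3x4 label matrix: a ceiling row, two walls, a floor row (made-up values).
toy = [[-1, -1, -1, -1],
       [1, 1, 2, 2],
       [0, 0, 0, 0]]
print(class_fractions(toy))
print(num_walls(toy))
```

The same functions work unchanged on a NumPy array, since iterating a 2-D array yields its rows; for full-resolution images a vectorized `numpy.unique(labels, return_counts=True)` would be faster than the pure-Python loop used here for clarity.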

The ground truth for the dataset is also available as a separate download (Free your Camera Dataset groundtruth). It was kindly provided by Alejandro Rituerto, who introduced the ground-truth labeling in his work:

A. Rituerto, A. C. Murillo and J. J. Guerrero, “3D layout propagation to improve object recognition in egocentric videos,” in Second Workshop on Assistive Computer Vision and Robotics, held with ECCV, 2014.

Bibtex

@inproceedings{furlan2013bmvc,

author = {Furlan, Axel and Miller, Stephen and Sorrenti, Domenico G. and Fei-Fei, Li and Savarese, Silvio},

title = {Free your Camera: 3D Indoor Scene Understanding from Arbitrary Camera Motion},

booktitle = {BMVC},

year = {2013}

}